Neural Non-Rigid Tracking

2020

Conference Paper


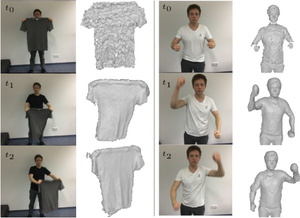

We introduce a novel, end-to-end learnable, differentiable non-rigid tracker that enables state-of-the-art non-rigid reconstruction. Given two input RGB-D frames of a non-rigidly moving object, we employ a convolutional neural network to predict dense correspondences. These correspondences are used as constraints in an as-rigid-as-possible (ARAP) optimization problem. By enabling gradient back-propagation through the non-rigid optimization solver, we are able to learn correspondences in an end-to-end manner such that they are optimal for the task of non-rigid tracking. Furthermore, this formulation allows for learning correspondence weights in a self-supervised manner. Thus, outliers and wrong correspondences are down-weighted to enable robust tracking. Compared to state-of-the-art approaches, our algorithm shows improved reconstruction performance, while simultaneously achieving 85× faster correspondence prediction than comparable deep-learning-based methods.
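To make the solver step described in the abstract more concrete, here is a minimal NumPy sketch under heavily simplifying assumptions. It is not the authors' implementation. It assumes that each source point is attached to a single deformation-graph node and that node rotations stay fixed at identity. Under those assumptions, the ARAP regularizer reduces to a graph-Laplacian smoothness term on node translations, and the whole problem becomes a weighted linear least-squares solve. The function names (`solve_translations`, `loss_and_weight_grad`), the smoothness weight `lam`, and the toy data are hypothetical. The second function back-propagates a loss on the recovered translations through the solve using an adjoint system. This illustrates the general idea that allows correspondence weights to be learned end to end.

```python
import numpy as np


def solve_translations(src, tgt, node_of_point, weights, edges, num_nodes, lam=1.0):
    """Minimize sum_k w_k ||src_k + t[node_k] - tgt_k||^2 + lam * sum_(i,j) ||t_i - t_j||^2.

    Simplified, hypothetical stand-in for an ARAP solve: node rotations are fixed
    to identity, so only per-node translations t (num_nodes x 3) are unknown.
    """
    A = np.zeros((num_nodes, num_nodes))
    b = np.zeros((num_nodes, 3))
    # Data term: each weighted correspondence pulls its node toward tgt_k - src_k.
    for k, i in enumerate(node_of_point):
        A[i, i] += weights[k]
        b[i] += weights[k] * (tgt[k] - src[k])
    # Regularizer: with identity rotations the ARAP term reduces to a
    # graph-Laplacian smoothness term between neighbouring node translations.
    for i, j in edges:
        A[i, i] += lam
        A[j, j] += lam
        A[i, j] -= lam
        A[j, i] -= lam
    # Normal equations of the quadratic energy (same matrix for x, y, z).
    return np.linalg.solve(A, b), A


def loss_and_weight_grad(src, tgt, node_of_point, weights, edges, num_nodes, t_gt, lam=1.0):
    """Return L = 0.5 * ||t - t_gt||^2 and dL/dw for every correspondence weight.

    Back-propagation through the solve uses the adjoint system A^T adj = dL/dt,
    giving dL/dw_k = adj[i] . ((tgt_k - src_k) - t[i]) with i = node_of_point[k].
    """
    t, A = solve_translations(src, tgt, node_of_point, weights, edges, num_nodes, lam)
    residual = t - t_gt
    loss = 0.5 * np.sum(residual ** 2)
    adj = np.linalg.solve(A.T, residual)
    grad = np.array([adj[i] @ ((tgt[k] - src[k]) - t[i])
                     for k, i in enumerate(node_of_point)])
    return loss, grad


# Toy data (hypothetical): two graph nodes, six clean correspondences that all
# move by +1 in x, plus one outlier on node 0 that claims a +10 move.
src = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0],
                [5, 0, 0], [6, 0, 0], [5, 1, 0],
                [0, 0, 1]], dtype=float)
tgt = src + np.array([1.0, 0.0, 0.0])
tgt[6] = src[6] + np.array([10.0, 0.0, 0.0])
node_of_point = [0, 0, 0, 1, 1, 1, 0]
edges = [(0, 1)]
t_gt = np.tile([1.0, 0.0, 0.0], (2, 1))

w_clean = np.array([1.0] * 6 + [0.0])  # outlier fully down-weighted
w_noisy = np.array([1.0] * 6 + [1.0])  # outlier trusted like the rest
t_clean, _ = solve_translations(src, tgt, node_of_point, w_clean, edges, 2)
t_noisy, _ = solve_translations(src, tgt, node_of_point, w_noisy, edges, 2)
loss, grad = loss_and_weight_grad(src, tgt, node_of_point,
                                  np.array([1.0] * 6 + [0.5]), edges, 2, t_gt)
print("translations, outlier ignored:\n", t_clean)
print("translations, outlier trusted:\n", t_noisy)
print("dL/dw:", grad)  # the outlier's entry is positive, so lowering its weight reduces the loss
```

In this toy example, setting the outlier's weight to zero recovers the ground-truth translation. Giving the outlier full weight instead pulls node 0 well off target. The gradient with respect to the outlier's weight is positive, so a gradient step would down-weight it. This mirrors the behaviour the abstract describes for learned weights. A full ARAP solver also optimizes node rotations, which makes the problem non-linear. This linear sketch omits them.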

| Author(s): | Bozic, Aljaz and Palafox, Pablo and Zollhöfer, Michael and Dai, Angela and Thies, Justus and Nießner, Matthias |

| Book Title: | NeurIPS |

| Year: | 2020 |

| Department(s): | Neural Capture and Synthesis |

| Bibtex Type: | Conference Paper (inproceedings) |

| URL: | https://justusthies.github.io/posts/neuraltracking/ |

| Links: | Paper, Video |


BibTeX:

@inproceedings{bozic2020neuraltracking,

title = {Neural Non-Rigid Tracking},

author = {Bozic, Aljaz and Palafox, Pablo and Zollh{\"o}fer, Michael and Dai, Angela and Thies, Justus and Nie{\ss}ner, Matthias},

booktitle = {NeurIPS},

year = {2020},


url = {https://justusthies.github.io/posts/neuraltracking/}

}
